In an unannounced update to its usage policy, OpenAI has opened the door to military applications of its technologies. The policy previously prohibited use of its products for the purposes of “military and warfare,” but that language has now disappeared, and OpenAI did not deny that it was now open to military uses.

The Intercept first noticed the change, which appears to have gone live on January 10.

Unannounced changes to policy wording happen fairly frequently in tech as the products they govern evolve and change, and OpenAI is clearly no different. In fact, the company’s recent announcement that its user-customizable GPTs would be rolling out publicly alongside a vaguely articulated monetization policy likely necessitated some changes.

But the change to the no-military policy can hardly be a consequence of this particular new product. Nor can it credibly be claimed that the exclusion of “military and warfare” is just “clearer” or “more readable,” as a statement from OpenAI regarding the update does. It’s a substantive, consequential change of policy, not a restatement of the same policy.

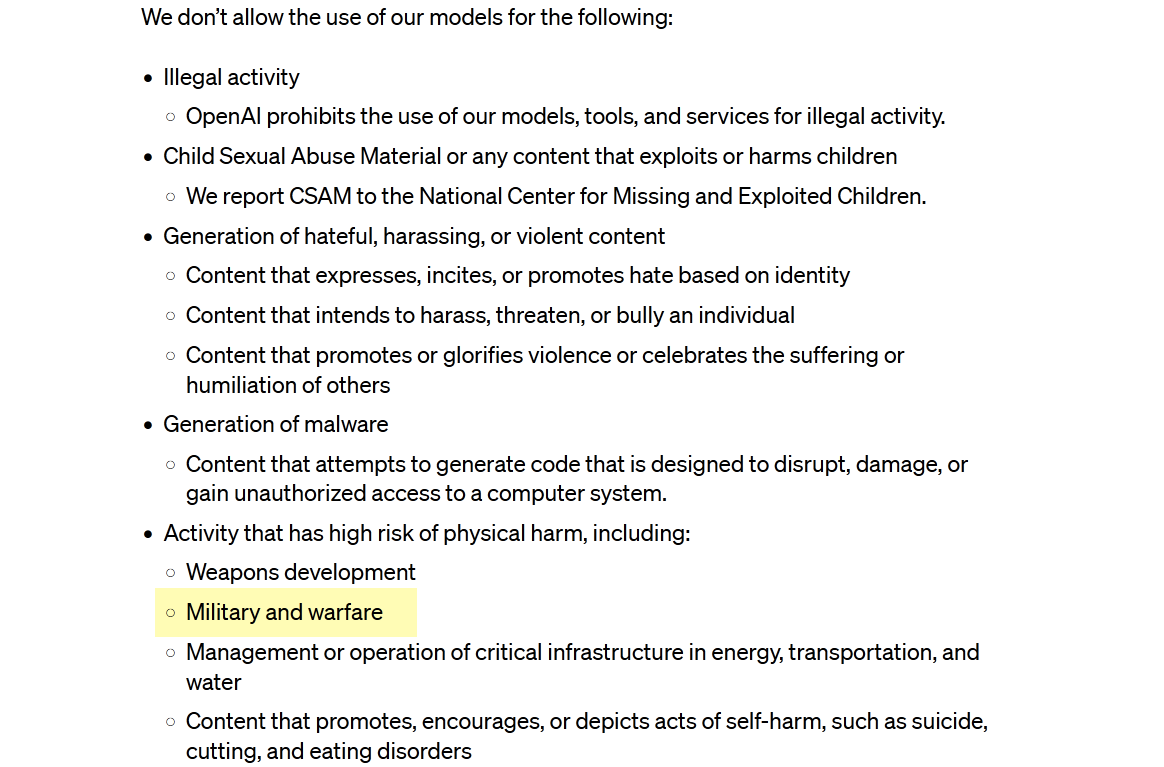

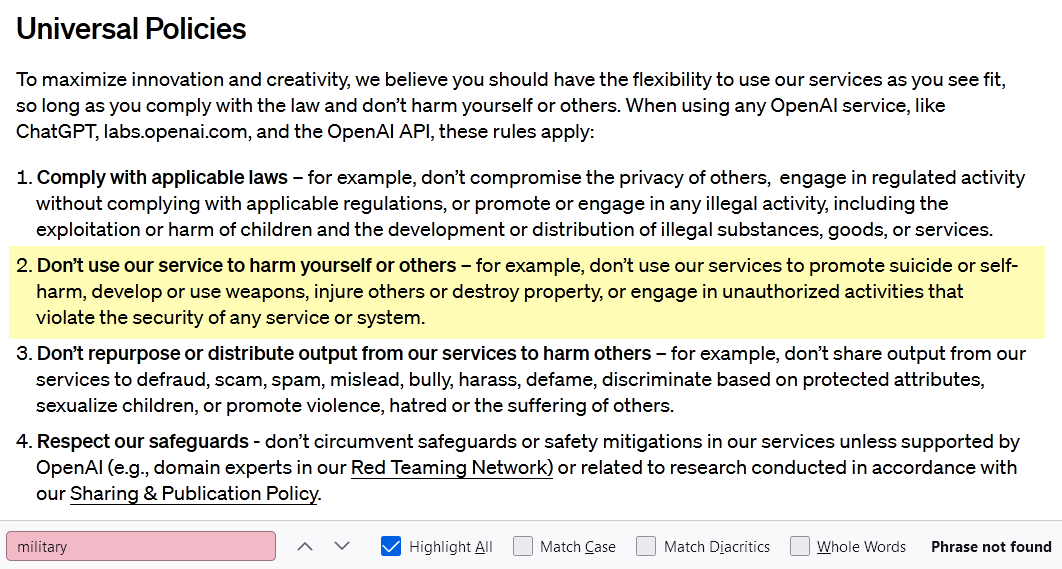

You possibly can learn the present utilization coverage right here, and the outdated one right here. Listed below are screenshots with the related parts highlighted:

Earlier than the coverage change.

After the coverage change.

Obviously the whole thing has been rewritten, though whether it’s more readable or not is more a matter of taste than anything. I happen to think a bulleted list of clearly disallowed practices is more readable than the more general guidelines they’ve been replaced with. But the policy writers at OpenAI clearly think otherwise, and if this gives more latitude for them to interpret favorably or disfavorably a practice hitherto outright disallowed, that is simply a pleasant side effect.

As OpenAI representative Niko Felix explained, there is still a blanket prohibition on developing and using weapons, but you can see that it was originally listed separately from “military and warfare.” After all, the military does more than make weapons, and weapons are made by others than the military.

And it is precisely where those categories do not overlap that I would speculate OpenAI is examining new business opportunities. Not everything the Defense Department does is strictly warfare-related; as any academic, engineer, or politician knows, the military establishment is deeply involved in all kinds of basic research, funding, small business funds, and infrastructure support.

OpenAI’s GPT platforms could be of great use to, say, military engineers looking to summarize decades of documentation of a region’s water infrastructure. It’s a genuine conundrum at many companies how to define and navigate their relationship with government and military money. Google’s “Project Maven” famously took one step too far, though few seemed to be as bothered by the multibillion-dollar JEDI cloud contract. It might be OK for an academic researcher on an Air Force Research Lab grant to use GPT-4, but not a researcher inside the AFRL working on the same project. Where do you draw the line? Even a strict “no military” policy has to stop after a few removes.

That said, the total removal of “military and warfare” from OpenAI’s prohibited uses suggests that the company is, at the very least, open to serving military customers. I asked the company to confirm or deny that this was the case, warning them that the language of the new policy made it clear that anything but a denial would be interpreted as a confirmation.

As of this writing they have not responded. I will update this post if I hear back.